The year is 2025. Israel, a part of the Western World, is performing genocide on Palestinians, slaughtering and starving them to death by tens of thousands. The Israelis are doing to Palestinians exactly what the Nazis did to the Jews. The oppressed became the oppressors. Never again turned out to be an empty slogan. Apparently it’s OK to Israelis if it’s them who do the mass killings.

Llama 4 smells bad

Meta has distinguished itself positively by releasing three generations of Llama, a semi-open LLM with weights available if you ask nicely (and provide your full legal name, date of birth, and full organization name with all corporate identifiers). So no, it’s not open source. Anyway, on Saturday (!) April the 5th, Meta released Llama 4.

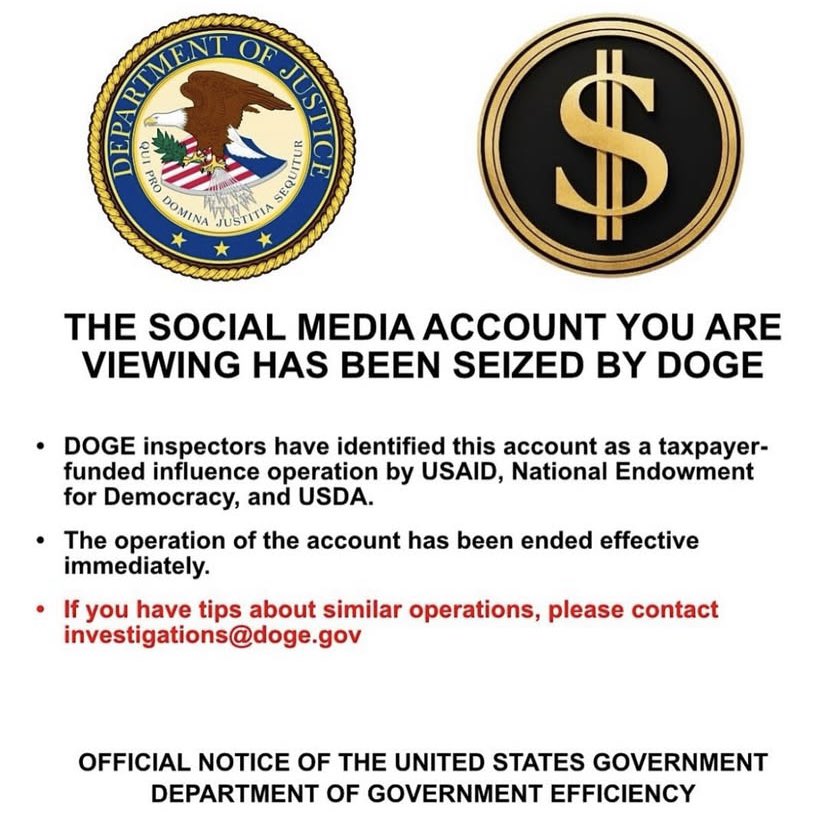

SEIZED BY DOGE

Beware of Musk’s chatbot, Grok

Elon Musk bought Twitter and used it to help elect Trump. He also controls a popular chatbot, which he might use to exert political influence when he needs to.

They are selling dollars for 69 cents now

Back in October 2024 we posted about the US presidential election probabilities, and if anyone took the gamble, it backfired. To make up for it, here’s a new tip.

Paper review: X-Sample Contrastive Loss

The paper, X-Sample Contrastive Loss: Improving Contrastive Learning with Sample Similarity Graphs, with Yann LeCun as the last author, is about similarity signals between samples, typically images. The point is to learn good representations (embeddings) by contrasting similar and dissimilar images.

They are selling a dollar coin flip for 36 cents

Today the implied probability of Kamala Harris being elected president of the United States reached 36 percent on Polymarket. This is interesting because it’s the result of a concerted effort to manipulate the odds, and in a wider context, to buy the election. While it is sad, it also presents an opportunity. Should you attempt to take some of that money?

Large language models in 2024

This is a high-level review of what’s been happening with large language models, to which we’ll also refer as the models, or chatbots. We focus on how expanding the context length allowed the models to rely more on data in prompts and less on the knowledge stored in their weights, resulting in fewer hallucinations.

How many letters R are there in the word STRAWBERRY?

Some people still post about the LLMs’ inability to tell how many times a particular letter appears in a given word. Let’s take a look and try to understand the basic issue here.

LLM snake oil drama

For the last year or so, OpenAI was the main provider of drama in the AI world. Now there’s a new player, although it is shaping up to be a one-hit wonder. Here’s a short overview of what happened.