In part one we attempted to show that fears of true AI have very little to do with present reality. That doesn’t stop the believers, who say it might just take many decades for machine intelligence to emerge.

How do you dispute such claims? It is possible that real AI will appear. It’s also possible that a giant asteroid will hit the earth. Or a meteorite, or a comet. Maybe hostile aliens will land; there have been a few movies about that too.

“I want to believe”

Luke Muehlhauser, director of the Machine Intelligence Research Institute:

We advocate more work on the AGI safety challenge today not because we think AGI is likely in the next decade or two, but because AGI safety looks to be an extremely difficult challenge (more challenging than managing climate change, for example) and one requiring several decades of careful preparation.

The greatest risks from both climate change and AI are several decades away, but thousands of smart researchers and policy-makers are already working to understand and mitigate climate change, and only a handful are working on the safety challenges of advanced AI.

Just one note here: climate change is science. Artificial General Intelligence, as of now, is science fiction.

A few decades

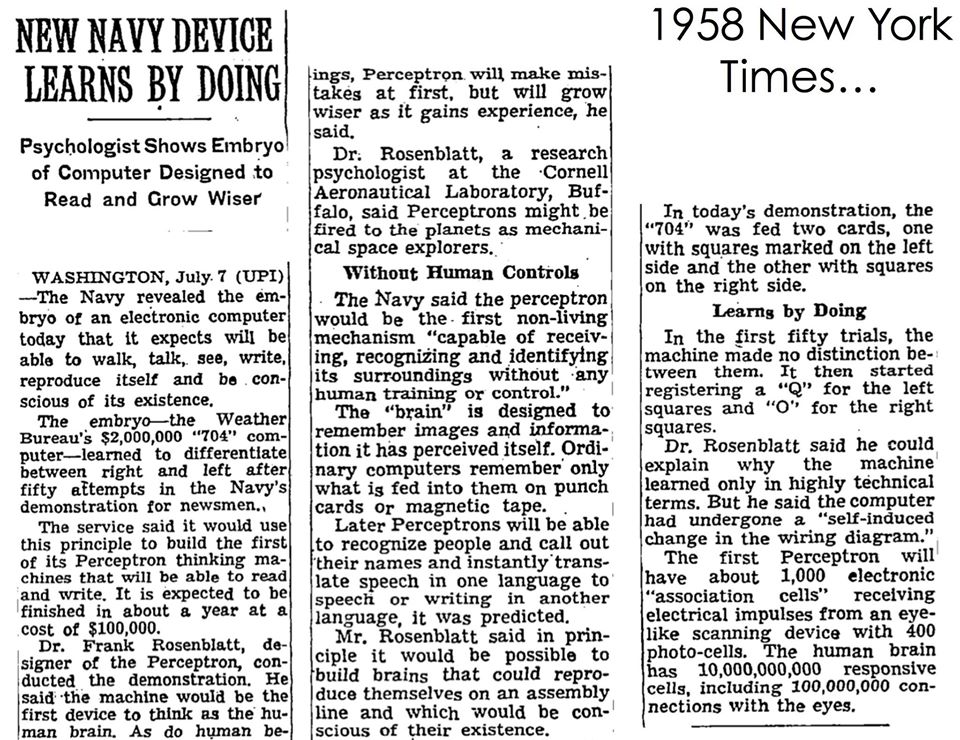

We don’t know what will happen in a few decades, but we can check what happened a few decades ago. Let’s go back to 1958 and Dr. Franken... er, Frank Rosenblatt’s invention. The New Yorker of December 6th has a story about the perceptron, a new electronic brain which hasn’t been built, but which has been successfully simulated on the I.B.M. 704:

It interacts with its environment, forming concepts that have not been made ready for it by a human agent. (…) It can tell the difference between a cat and a dog, although it wouldn’t be able to tell whether the dog was to the left or right of the cat.

And to think that Kaggle held the cats and dogs competition in 2014!

Earlier in 1958, on July 7th, The New York Times published an article about the perceptron. The Navy expected it to be able to walk, talk, see, write, reproduce itself and be conscious of its existence, although at the time its achievements were more modest: it learned to differentiate between right and left after fifty attempts.
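For readers who haven’t met it, the perceptron’s learning rule fits in a few lines. Below is a minimal modern Python sketch of the classic error-correction rule (adjust the weights toward the correct answer only when a prediction is wrong), trained on a toy left-versus-right task. The task, the six-cell "retina" and all function names are our own illustrative assumptions, not a reconstruction of the program that ran on the I.B.M. 704.

```python
# A minimal perceptron sketch (illustrative; not Rosenblatt's original program).

def make_examples(width=6):
    """Hypothetical toy task: a 1-D 'retina' with exactly one lit cell.
    Label +1 if the lit cell is in the right half, -1 if it is in the left half."""
    examples = []
    for pos in range(width):
        x = [0.0] * width
        x[pos] = 1.0
        label = 1 if pos >= width // 2 else -1
        examples.append((x, label))
    return examples


def predict(weights, bias, x):
    """Threshold unit: fire (+1) if the weighted sum plus bias is positive."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else -1


def train_perceptron(examples, epochs=20, lr=1.0):
    """Error-correction rule: change the weights only when a prediction is wrong,
    moving them toward the correct label. Returns weights, bias, update count."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    updates = 0
    for _ in range(epochs):
        mistakes = 0
        for x, label in examples:
            if predict(weights, bias, x) != label:
                weights = [w + lr * label * xi for w, xi in zip(weights, x)]
                bias += lr * label
                mistakes += 1
                updates += 1
        if mistakes == 0:  # a full pass with no errors: training has converged
            break
    return weights, bias, updates


weights, bias, updates = train_perceptron(make_examples())
print("weights:", weights, "bias:", bias, "updates:", updates)
```

On this toy task the weights settle after a handful of corrections: negative for the left half of the retina, positive for the right. Convergence is guaranteed for any problem a hyperplane can separate, and says nothing at all about walking, talking or self-awareness.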

Some fiction

Even before World War II, robots were a menace. Slate reports:

In the autumn of 1932, a British inventor named Harry May invited some friends over to see a demonstration of his latest invention, a robot called Alpha that could fire a gun at a target. Operated by wireless control, the robot sat lifeless in a chair on one side of the room. May placed a firearm in the robot’s hand and made his way to the other side of the room to set up a target.

With the inventor’s back turned, the two-ton Alpha slowly rose to his feet and pointed the gun with his metallic arm. The men shouted warnings while the women screamed in terror. The inventor turned and was startled to see that his robot had come to life and was now pointing a gun directly at him. Alpha lunged forward.

One more thing you need to know: Alpha was black. Naturally, having disposed of the creator, he went on to consummate his victory.

Anyway, they lived happily ever after, and their numerous offspring will be attending the Second International Congress on Love and Sex with Robots in Malaysia.

UPDATE: Take a look at these state-of-the-art robots: https://www.youtube.com/watch?v=g0TaYhjpOfo. We’re already in love! Or is this compassion?

God complex

In our opinion, sentience means life. Not in a biological ingest-and-digest sense, but life nonetheless. What a grand thing to do: create life (by means other than sex). We’d effectively become gods to those created.

Image credits: Michelangelo, Second International Congress on Love and Sex with Robots.

Movies and other sci-fi works have accustomed us to robots that are self-aware and intelligent, but still second-class beings, which is quite natural when a human is the creator. No wonder this kind of relationship breeds the fear that the slaves will turn against their masters.

Stanisław Lem, a famous Polish science-fiction writer, presented a different view in his Fables for Robots. There, the universe is populated by robots. A strange soft watery creature appears in only one fable: How Erg the Self-Inducting Slew a Paleface.

To sum up: creating real intelligence would be an enormous ego boost. That’s the basic reason why the subject is so attractive. Also, real AI would have many practical applications in robotics.

Mechanistic view of the mind

Many people reason like this: the brain has x neurons; current neural networks have y neurons; if computers keep getting faster at the current rate, we’ll have networks with x neurons in z years, and therefore we’ll be able to match the abilities of the brain. Here “brain” is used as a synonym for “mind”.
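Written out, the extrapolation is a single line of algebra. Assume, as the argument implicitly does, that network size doubles every T years (T is our placeholder, not a figure from any source). Starting from y neurons today, the size after z years is y · 2^(z/T); setting that equal to x gives the predicted date:

```latex
y \cdot 2^{z/T} = x
\quad\Longrightarrow\quad
z = T \log_2 \frac{x}{y}
```

The formula is only as good as its premises: that x and y count comparable things, and that the doubling never stops.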

As a remedy, take a look at the article On the Imminence and Danger of AI by Adam Ierymenko. The author says he formally studied both computer science and biology, and spent many years in a previous “life” working on evolutionary approaches to machine learning and AI.

The brain is not a neural network, and a neuron is not a switch.

The brain contains a neural network. But saying that the brain “is” a neural network is like saying a city “is” buildings and roads and that’s all there is to it.

The brain is not a simple network. It’s at the very least a nested set of networks of networks of networks with other complexities like epigenetics and hormonal systems sprinkled on top.

You can’t just make a blind and sloppy analogy between neurons and transistors, peg the number of neurons in the brain on a Moore’s Law plot, and argue that human-level AI is coming Real Soon Now.

Even with all these complexities, that’s still a mechanistic view. We believe there is more to mind and sentience than the underlying flesh. That’s why we’re skeptical about the possibility of true machine intelligence.

Clear and present danger

It is telling that most people who actually do machine learning aren’t afraid of superhuman AI (even if they believe it’s possible). Take Andrew Ng, for example:

I think the fears about evil killer robots are overblown. There’s a big difference between intelligence and sentience. Our software is becoming more intelligent, but that does not imply it is about to become sentient.

The biggest problem that technology has posed for centuries is the challenge to labor. For example, there are 3.5 million truck drivers in the US, whose jobs may be affected if we ever manage to develop self-driving cars. I think we need government and business leaders to have a serious conversation about that, and think the hype about evil killer robots is an unnecessary distraction.

In the US, truck driver is the third most common job, after salesman and manager. Now consider self-driving trucks, as envisioned in The Simpsons in 1999.

Image credit: The Simpsons.

What if you’re not a truck driver - is there anything to worry about? An article by Neil Lawrence sheds some light on the subject.

People now leave a trail of data-crumbs wherever we travel. Supermarket loyalty cards, text messages, credit card transactions, web browsing and social networking. The power of this data emerges, like that of capital, when it’s accumulated. (…) Where does this power come from? Cross linking of different data sources can give deep insights into personality, health, commercial intent and risk. The aim is now to understand and characterize the population, perhaps down to the individual level.

For example, it seems that Facebook can assess your personality better than your family:

Compared with the accuracy of various human judges (…), computer models need 10, 70, 150, and 300 Likes, respectively, to outperform an average work colleague, cohabitant or friend, family member, and spouse.

The same, of course, goes for the NSA and other similar entities. By the way, there’s a data mining company, Palantir, specializing in the aforementioned cross-linking of different data sources. It provides solutions to US government agencies.

The bottom line: A CIA-funded firm run by an eccentric philosopher has become one of the most valuable private companies in tech, priced at between $5 billion and $8 billion in a round of funding the company is currently pursuing.

But that’s just the internet; we have “real” lives, right? Real lives with smartphones at the center. Coming up are smart homes and the internet of things: things like the Xbox and smart TVs. Someone compared excerpts from the Samsung SmartTV privacy policy and Orwell’s 1984:

Left: Samsung SmartTV privacy policy, warning users not to discuss personal info in front of their TV. Right: 1984. pic.twitter.com/osywjYKV3W

— Parker Higgins (@xor) February 8, 2015

They say that in Soviet Russia, TV watches you. Elsewhere, it just listens. Other devices do the watching.

TWO-YEARS-LATER UPDATE: Surprise, surprise - CIA Tapping Microphones On Some Samsung TVs

UPDATE: Linus Torvalds, the creator of Linux, expressed this pleasantly sober view on the dangers of AI in his 2015 Slashdot interview:

Some computer experts like Marvin Minsky, Larry Page, Ray Kurzweil think A.I. will be a great gift to Mankind. Others like Bill Joy and Elon Musk are fearful of potential danger. Where do you stand, Linus?

I just don’t see the thing to be fearful of.

We’ll get AI, and it will almost certainly be through something very much like recurrent neural networks. And the thing is, since that kind of AI will need training, it won’t be “reliable” in the traditional computer sense. It’s not the old rule-based prolog days, when people thought they’d understand what the actual decisions were in an AI.

And that all makes it very interesting, of course, but it also makes it hard to productize. Which will very much limit where you’ll actually find those neural networks, and what kinds of network sizes and inputs and outputs they’ll have.

So I’d expect just more of (and much fancier) rather targeted AI, rather than anything human-like at all. Language recognition, pattern recognition, things like that. I just don’t see the situation where you suddenly have some existential crisis because your dishwasher is starting to discuss Sartre with you.

The whole “Singularity” kind of event? Yeah, it’s science fiction, and not very good SciFi at that, in my opinion. Unending exponential growth? What drugs are those people on? I mean, really..

It’s like Moore’s law - yeah, it’s very impressive when something can (almost) be plotted on an exponential curve for a long time. Very impressive indeed when it’s over many decades. But it’s still just the beginning of the “S curve”. Anybody who thinks any different is just deluding themselves. There are no unending exponentials.
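Torvalds’s S-curve remark is easy to check numerically. The sketch below compares pure exponential growth with a logistic curve that starts at the same value and grows at the same initial rate but has a ceiling. The ceiling k=1000 and rate r=0.35 are arbitrary assumptions chosen for illustration, not measurements of anything.

```python
import math


def exponential(t, a0=1.0, r=0.35):
    """Pure exponential growth a0 * e^(r*t): it never levels off."""
    return a0 * math.exp(r * t)


def logistic(t, k=1000.0, a0=1.0, r=0.35):
    """Logistic 'S curve' with the same start value a0 and initial rate r,
    but bounded above by the ceiling k."""
    return k / (1 + (k / a0 - 1) * math.exp(-r * t))


# Early on the two curves nearly coincide; later the logistic one saturates.
for t in (0, 5, 10, 20, 30, 40):
    e, s = exponential(t), logistic(t)
    print(f"t={t:2d}  exponential={e:12.1f}  logistic={s:8.1f}  ratio={s / e:.3f}")
```

In the first few steps the ratio stays close to 1, which is exactly why a long run of doubling looks as if it will go on forever; by the end the logistic curve has flattened just below its ceiling while the exponential is past a million.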